In the past years, AI agents have been everywhere across industries, autonomously and independently operating over extended periods using various tools to accomplish complex tasks.

With the advent of numerous frameworks for building these AI agents, observability and DevTool platforms for AI agents have become essential in artificial intelligence. These platforms provide developers with powerful tools to monitor, debug, and optimize AI agents, ensuring their reliability, efficiency, and scalability. Let’s explore the key features of these platforms and examine some code examples to illustrate their practical applications.

Key Features of Observability and DevTool Platforms

Session Tracking and Monitoring

These platforms offer comprehensive session tracking capabilities, allowing developers to monitor AI agent interactions, including LLM calls, costs, latency, and errors. This feature is crucial for understanding the performance and behavior of AI agents in real time.

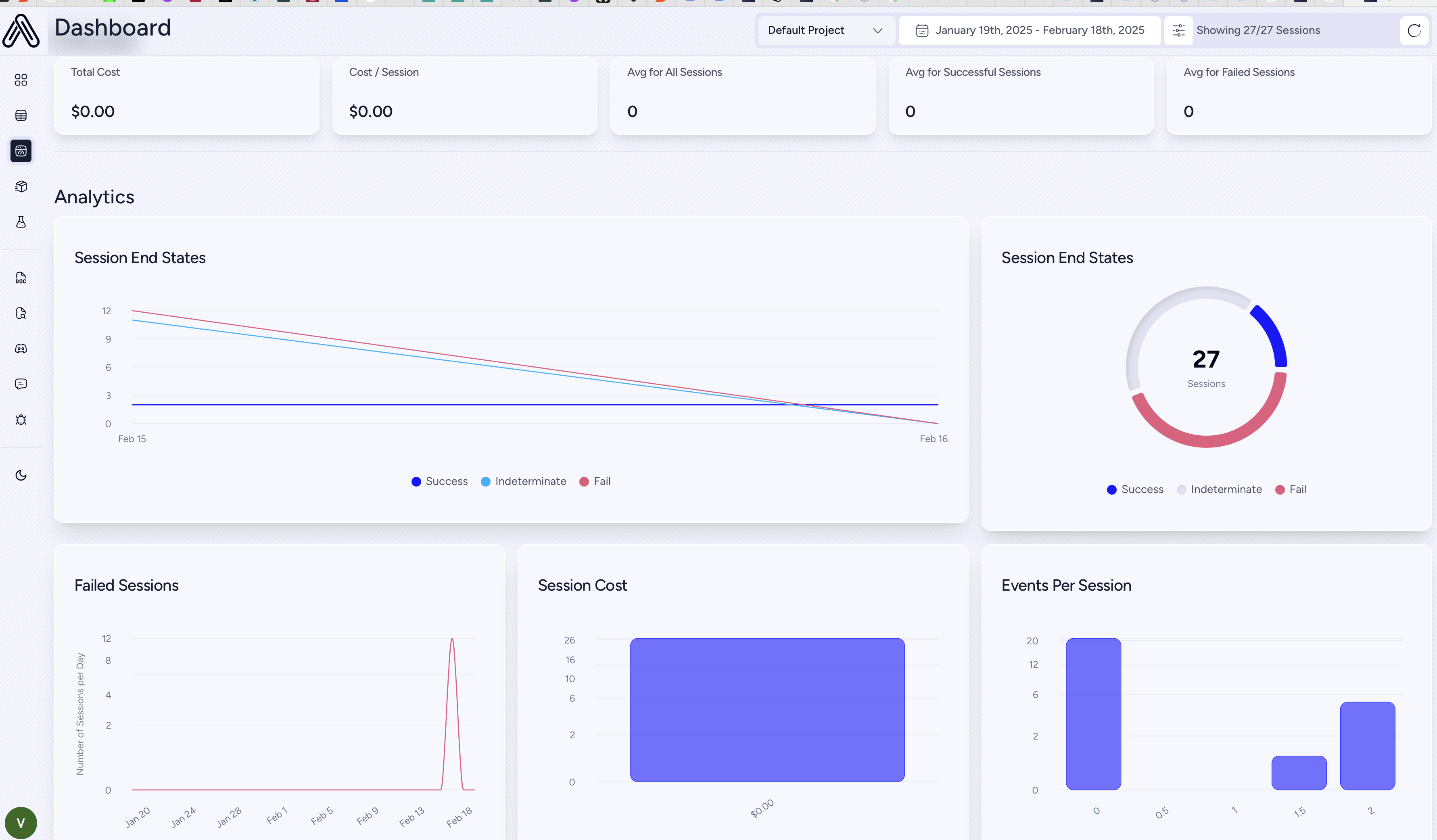

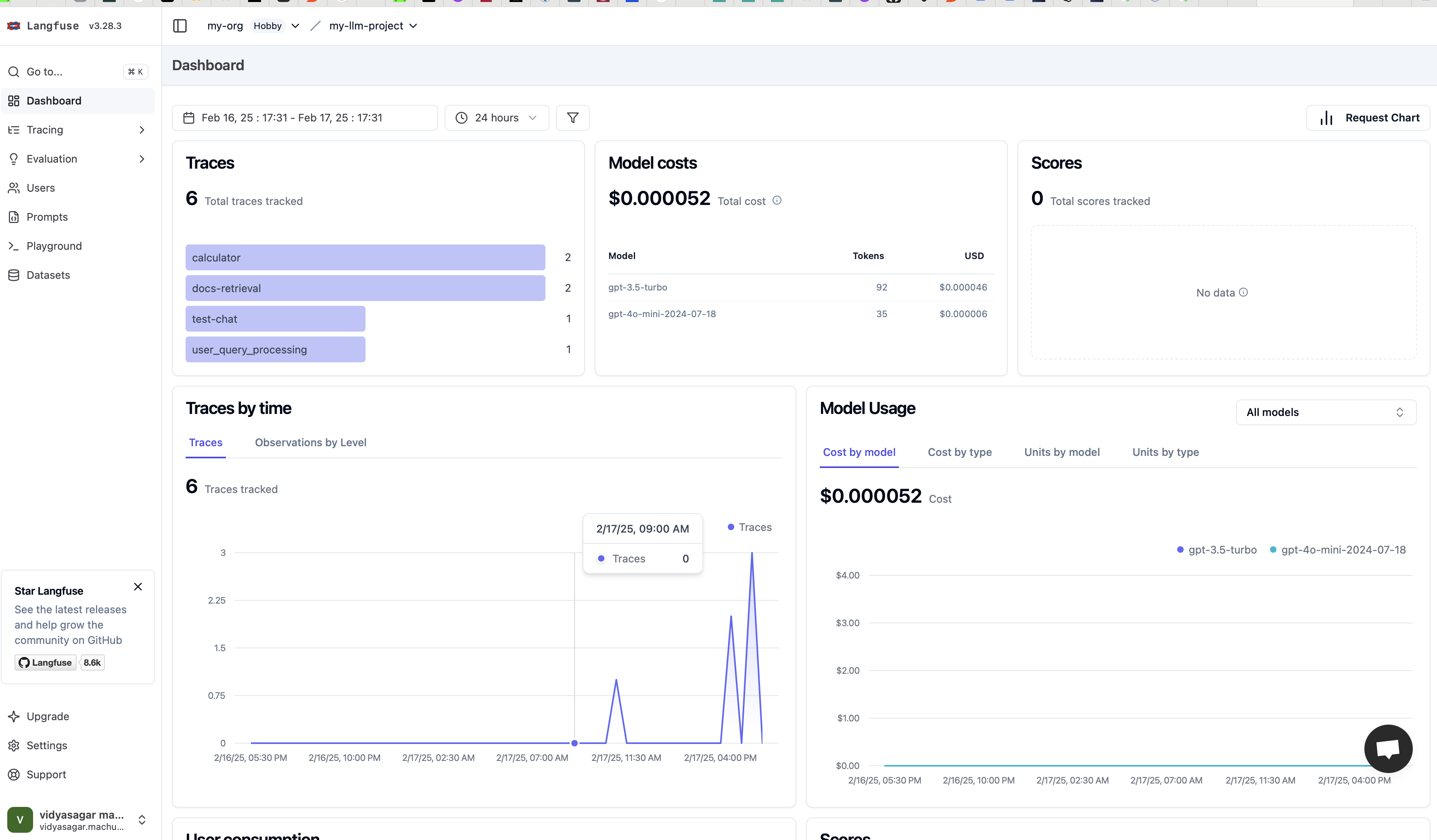

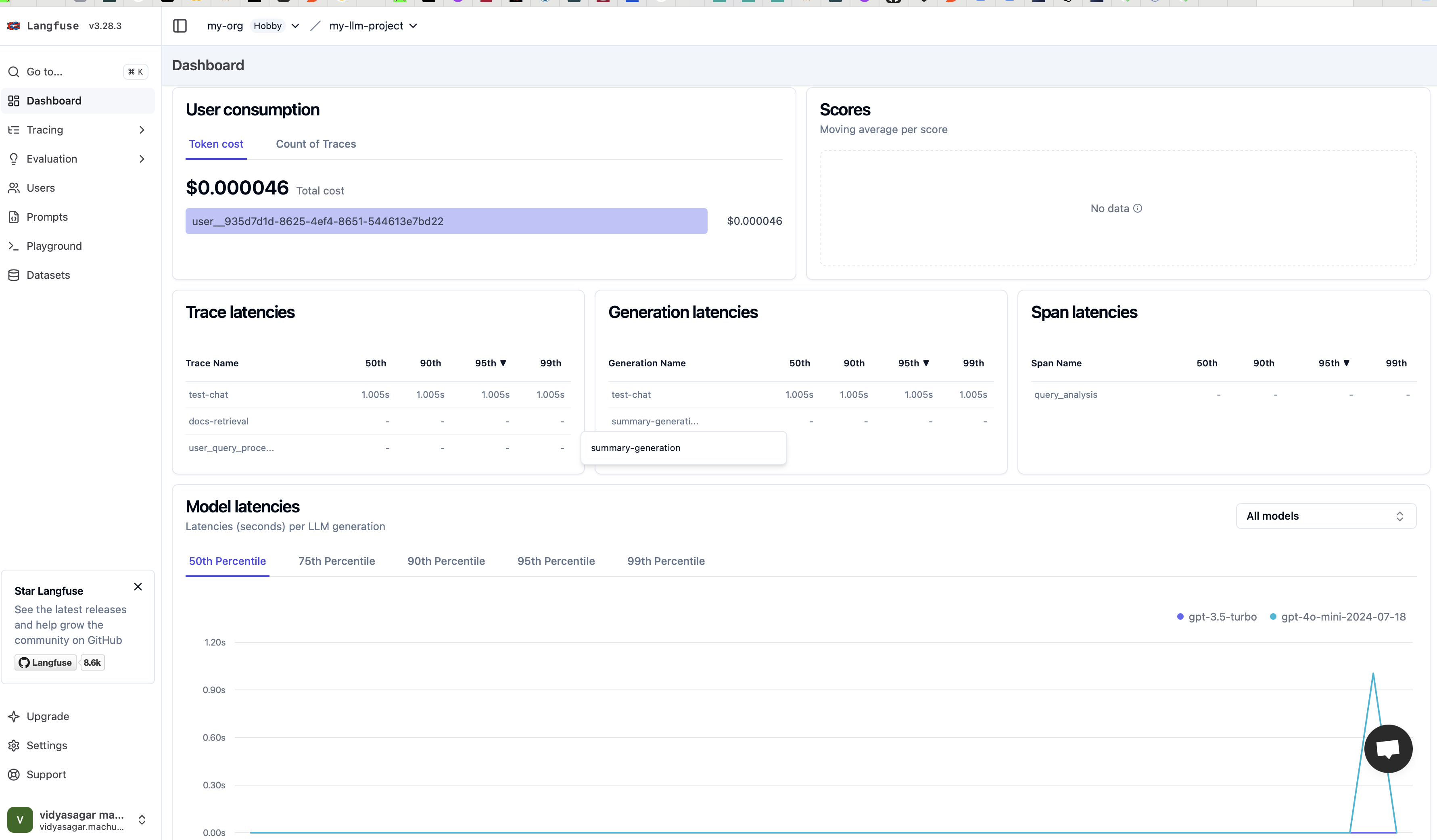

Analytics Dashboard

Observability platforms typically include analytics dashboards that display high-level statistics about agent performance. These dashboards provide insights into cost tracking, token usage analysis, and session-wide metrics.

Debugging Tools

Advanced debugging features like “Session Waterfall” views and “Time Travel Debugging” allow developers to inspect detailed event timelines and restart sessions from specific checkpoints.

Compliance and Security

Security features are built into these platforms to detect potential threats such as profanity, PII leaks, and prompt injections. They also provide audit logs for compliance tracking.

Code Examples

Let’s look at some code examples to illustrate how these platforms can be integrated into AI agent development.

Integrating AgentOps for Session Tracking

AgentOps helps developers build, evaluate, and monitor AI agents from prototype to production.

Check the AgentOps Quick Start guide to start using AgentOps. This step guides you in creating an AGENTOPS_API_KEY. We use Autogen, a multi-agent framework that helps build AI applications.

Check the complete code with the required files in the GitHub repository here.

import json

import os

import agentops

from agentops import ActionEvent

from autogen import ConversableAgent, config_list_from_json

from dotenv import load_dotenv

load_dotenv()

def calculator(a: int, b: int, operator: str) -> int:

if operator == "+":

return a + b

elif operator == "-":

return a - b

elif operator == "*":

return a * b

elif operator == "https://dzone.com/":

return int(a / b)

else:

raise ValueError("Invalid operator")

session = agentops.init(api_key=os.getenv("AGENTOPS_API_KEY"))

# Initialize AgentOps session

session.record(ActionEvent("llms"))

session.record(ActionEvent("tools"))

# Configure the assistant agent

assistant = ConversableAgent(

name="Assistant",

system_message="You are a helpful AI assistant.",

llm_config={"config_list": config_list_from_json("OAI_CONFIG_LIST")}

)

# Register the calculator tool

assistant.register_for_llm(name="calculator", description="Performs basic arithmetic")(calculator)

# Use the assistant

response = assistant.generate_reply(messages=[{"content": "What is 5+3?", "role": "user"}])

print(json.dumps(response, indent=4))

# End AgentOps session

session.end_session(end_state="Success")

In this example, we integrate AgentOps to track a session where an AI assistant uses a calculator tool. AgentOps will monitor the interactions, tool usage, and performance metrics throughout the session.

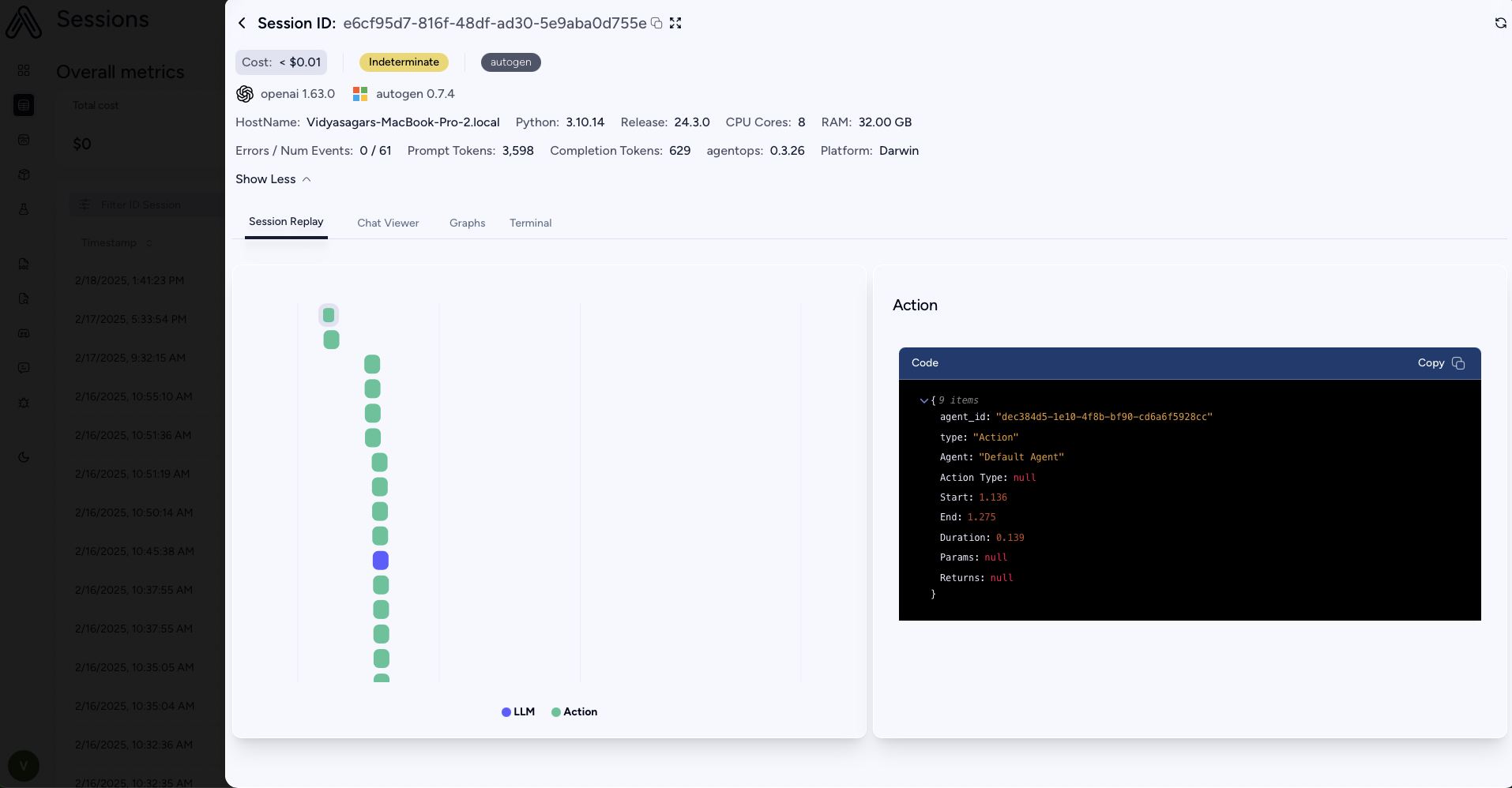

After providing all the credentials in the .env and OAI_CONFIG_FILE files following the instructions in the README.md file here and running the Python code, you should see a link to the AgentOps dashboard. Click on the link to see the dashboard with the metrics.

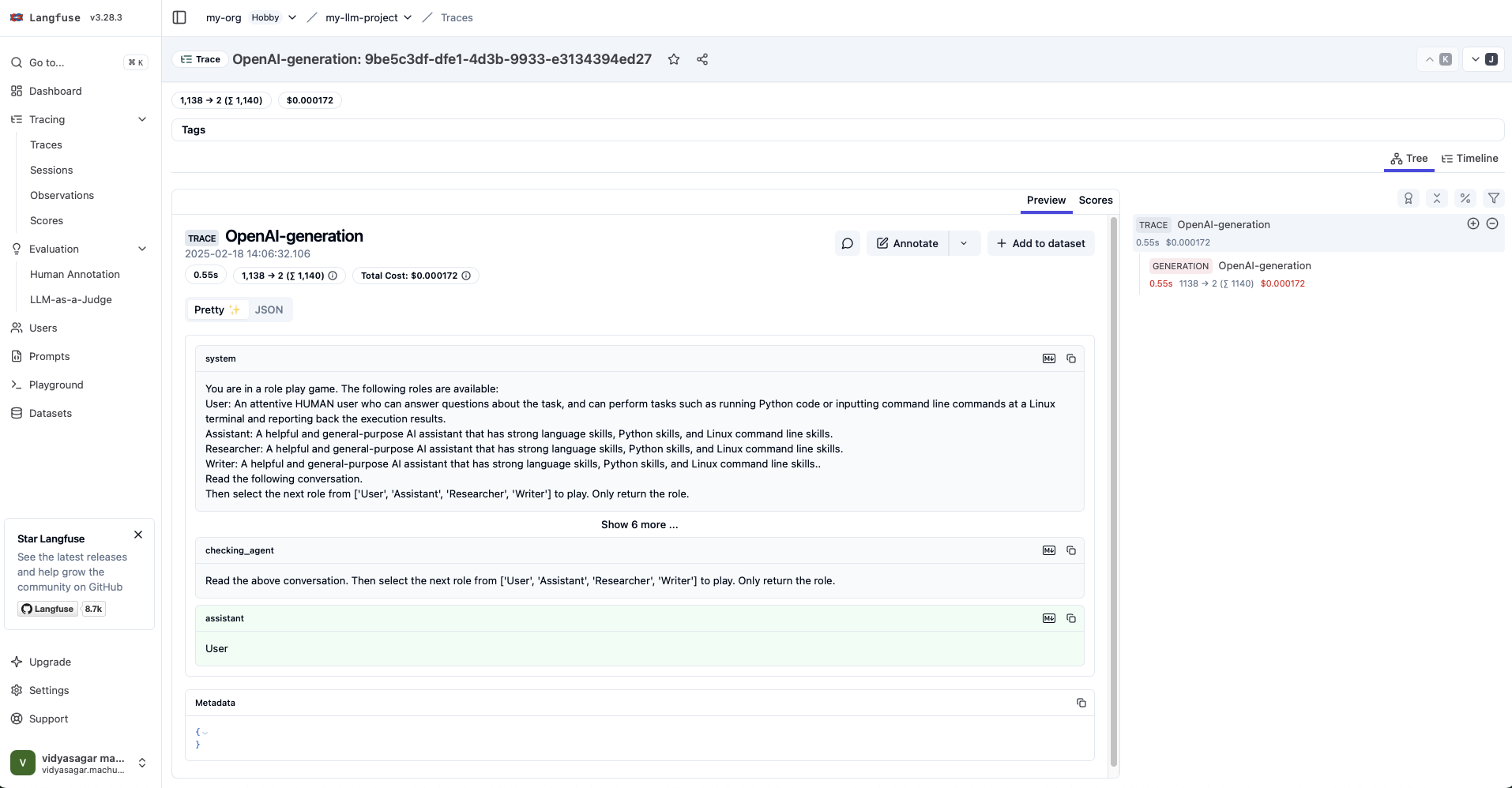

Using Langfuse for Tracing and Debugging

Langfuse is an open-source LLM engineering platform. It helps teams collaboratively develop, monitor, evaluate, and debug AI applications. Langfuse can be self-hosted in minutes and is battle-tested. With Langfuse, you can ingest Trace data from OpenTelemetry instrumentation libraries like Pydantic Logfire.

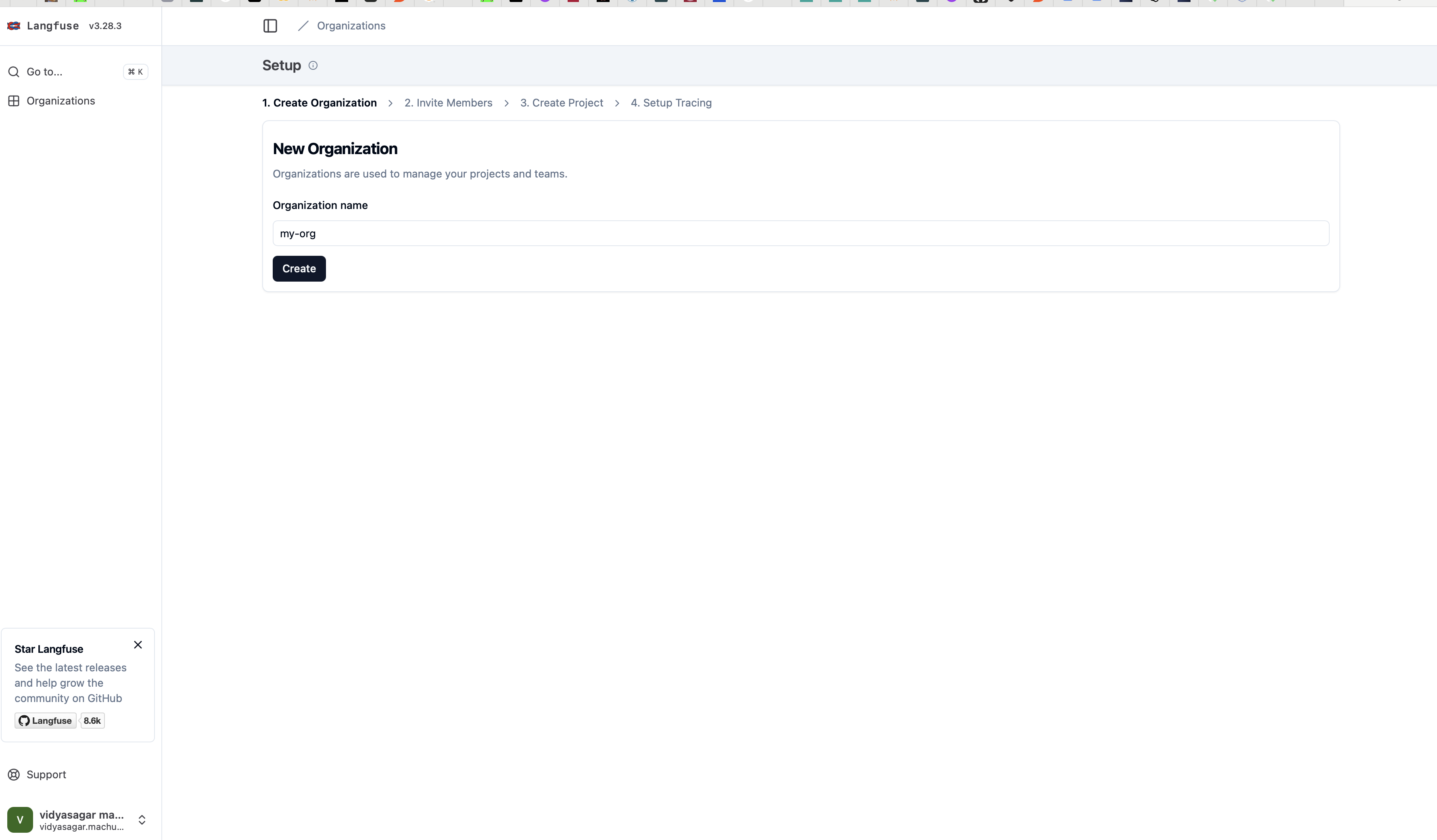

Before running the code sample below,

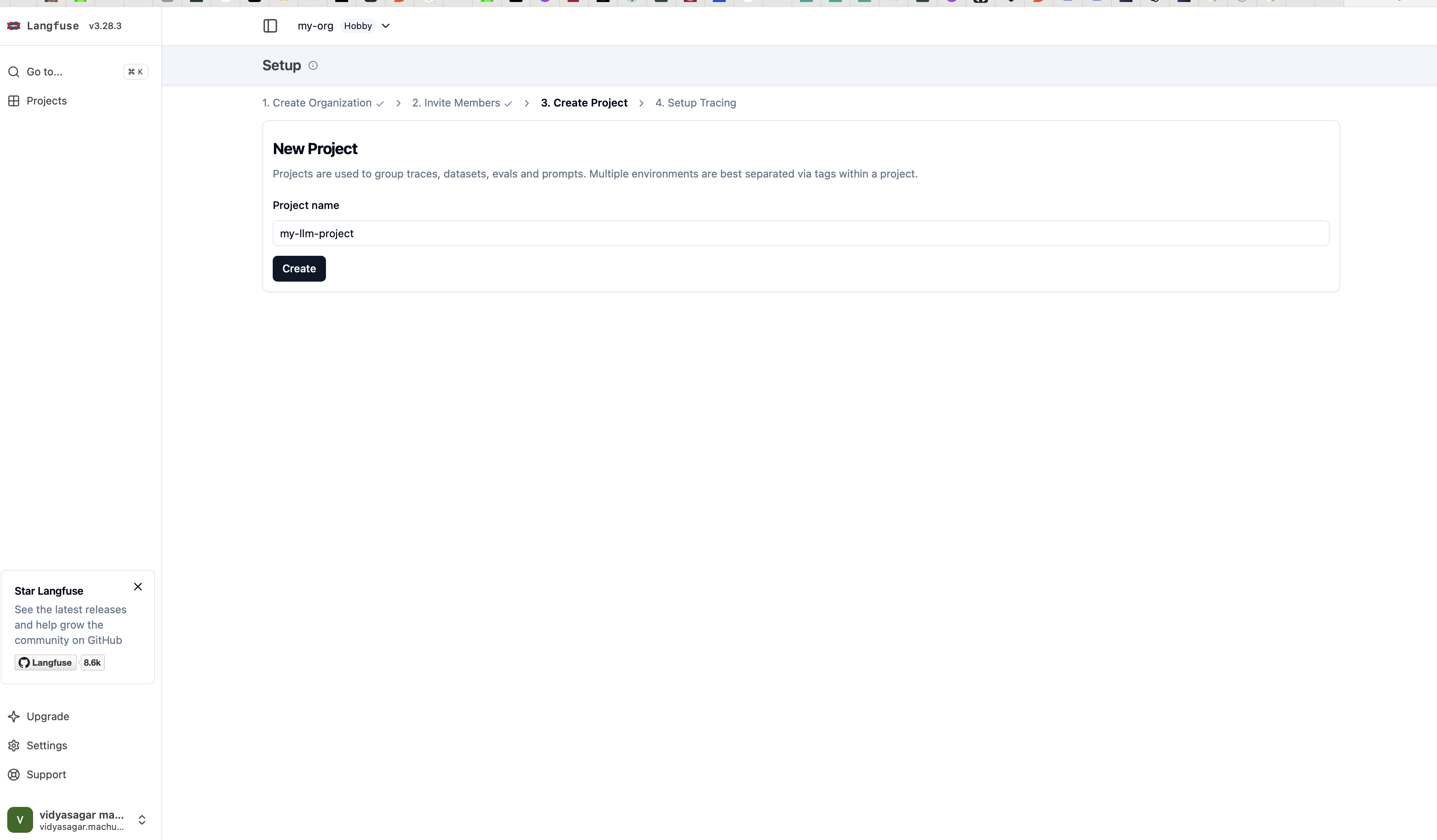

- Create a Langfuse account or self-host

- Create a new project

- Create new API credentials in the project settings

For this example, we are using the low-level SDK of Langfuse.

Langfuse project creation page

import os

from langfuse.openai import openai

from langfuse import Langfuse

from dotenv import load_dotenv

load_dotenv()

langfuse = Langfuse(

public_key=os.getenv("LANGFUSE_PUBLIC_KEY"),

secret_key=os.getenv("LANGFUSE_SECRET_KEY")

)

trace = langfuse.trace(

name="calculator"

)

openai.langfuse_debug = True

output = openai.chat.completions.create(

model="gpt-4o-mini",

messages=[

{"role": "system","content": "You are a very accurate calculator. You output only the result of the calculation."},

{"role": "user", "content": "1 + 1 = "}],

name="test-chat",

metadata={"someMetadataKey": "someValue"},

).choices[0].message.content

print(output)

This example demonstrates how to use Langfuse to trace and debug AI agent interactions. It creates a trace for processing a user query and uses spans to measure the time taken for different steps in the process.

Implementing Multi-Agent System With AutoGen

from autogen import AssistantAgent, UserProxyAgent, GroupChat, config_list_from_json, GroupChatManager

import agentops

from agentops import ActionEvent

import os

from dotenv import load_dotenv

from langfuse import Langfuse

from langfuse.openai import openai

load_dotenv()

session = agentops.init(api_key=os.getenv("AGENTOPS_API_KEY"))

# Initialize AgentOps session

session.record(ActionEvent("multi-agent"))

session.record(ActionEvent("autogen"))

llm_config = {"config_list": config_list_from_json("OAI_CONFIG_LIST")}

# Create agents

langfuse = Langfuse(

public_key=os.getenv("LANGFUSE_PUBLIC_KEY"),

secret_key=os.getenv("LANGFUSE_SECRET_KEY")

)

trace = langfuse.trace(

name="multi-agent"

)

openai.langfuse_debug = False

user_proxy = UserProxyAgent(name="User")

assistant = AssistantAgent(name="Assistant", llm_config=llm_config)

researcher = AssistantAgent(name="Researcher", llm_config=llm_config)

writer = AssistantAgent(name="Writer", llm_config=llm_config)

# Create a group chat

agents = [user_proxy, assistant, researcher, writer]

groupchat = GroupChat(agents=agents, messages=[], max_round=12)

manager = GroupChatManager(groupchat=groupchat, llm_config=llm_config)

# Start the conversation

user_proxy.initiate_chat(

manager,

message="Write a comprehensive report on the impact of AI on job markets."

)

# End AgentOps session

session.end_session(end_state="Success")This example showcases a multi-agent system using AutoGen. It creates multiple specialized agents (Assistant, Researcher, and Writer) to collaborate on a complex task. Observability platforms can be used to monitor the interactions between these agents and optimize their performance.

You can see the AgentOps session with the below tabs:

- Session Replay

- Chat Viewer

- Graphs

- Terminal

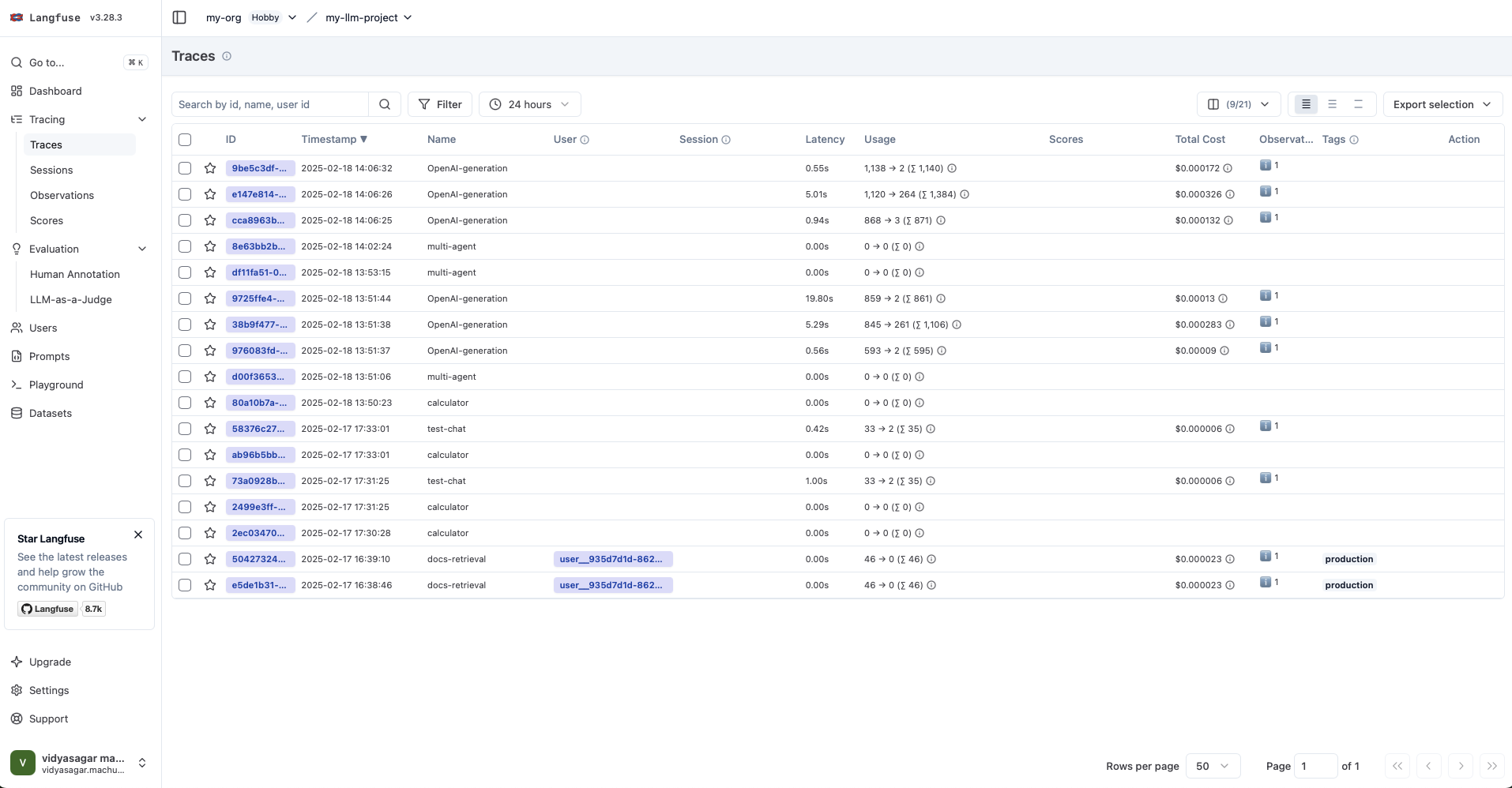

Here’s the LangFuse session:

Conclusion

Observability and DevTool platforms are transforming the way we develop and manage AI agents. They provide unprecedented visibility into AI operations, enabling developers to create more reliable, efficient, and trustworthy systems. As AI evolves, these tools will play an increasingly crucial role in shaping the future of intelligent, autonomous systems.

Using platforms like AgentOps, Langfuse, and AutoGen, developers can gain deep insights into their AI agents’ behavior, optimize performance, and ensure compliance with security and ethical standards. As the field progresses, we can expect these tools to become even more sophisticated, offering predictive capabilities and automated optimizations to further enhance AI agent development and deployment.