Amazon Web Services (AWS) has extended the reach of its generative artificial intelligence (AI) agents for developers into the realm of application testing.

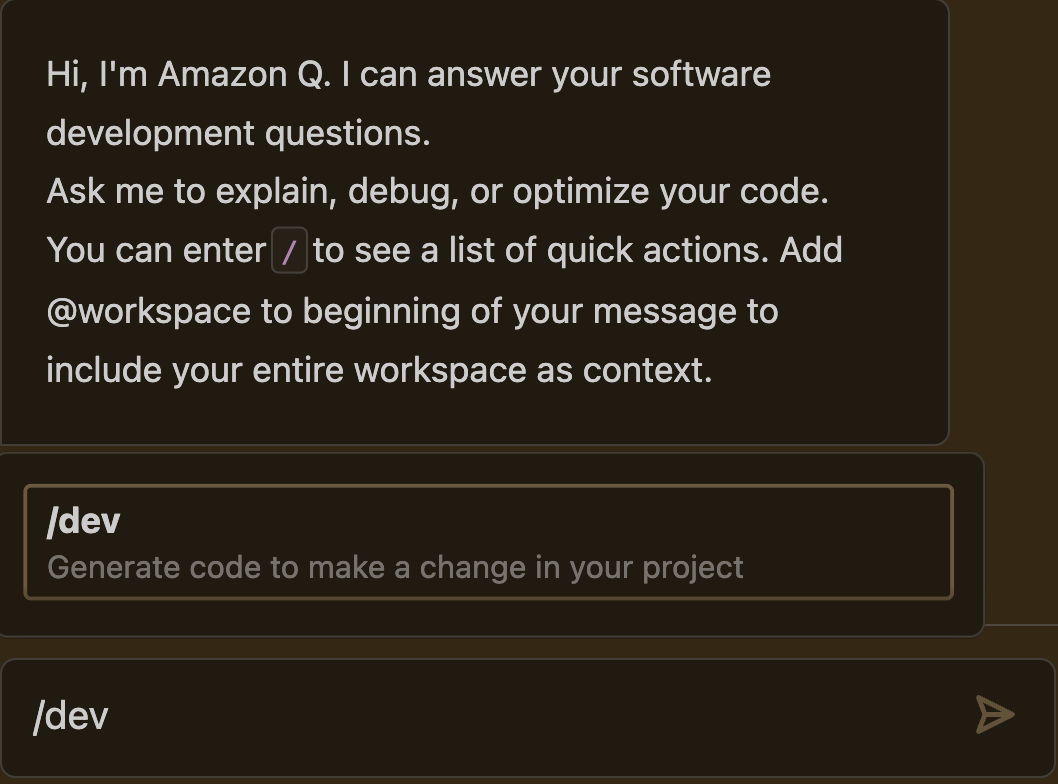

An update to Amazon Q Developer makes it possible for agents to build and test code in real time, validating any update from within the integrated development environment (IDE) a developer prefers.

Srini Iragavarapu, director of generative AI applications and developer experiences for AWS, said that by moving beyond code generation, the cloud service provider is now applying generative AI technologies in a way that ultimately improves the quality of the code that finds its way into a production environment.

Additionally, because it is now simpler to generate a test, more of the code being created is likely to have been tested early in the development lifecycle, he added.

For example, a developer can use a natural language request to ask the Amazon Q Developer agent to add a checkout feature to an e-commerce application. The agent then analyzes the existing codebase and, within minutes, makes all the necessary code changes, runs any unit tests and builds the code to verify it is ready for review.

Additionally, AWS is making use of the automated reasoning capabilities of large language models (LLMs) to verify the quality of the code being produced, noted Iragavarapu.

Collectively, those approaches enable developers to resolve issues on their own long before code is added to a larger codebase, he added.

The integration between Amazon Q Developer and the IDE is enabled by DevFile, an open format that many developers already use to define the configuration of their workspaces. Application developers can specify DevFile commands that assign specific tasks to Amazon Q Developer agents, including creating and running tests in an isolated sandbox environment.
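The general idea of declaring named commands and having an agent run them in sequence can be sketched in a few lines of Python. This is a minimal illustration, not how Amazon Q Developer actually executes DevFile commands: the `COMMANDS` list, its `install`, `build` and `test` ids, the `argv` field and the `run_commands` helper are all hypothetical names chosen for the example, and the commands themselves are harmless placeholders.

```python
import subprocess
import sys

# Hypothetical stand-in for the commands a developer might declare.
# The ids and placeholder command lines are assumptions for illustration;
# a real project would list its own install, build and test commands.
COMMANDS = [
    {"id": "install", "argv": [sys.executable, "-c", "print('dependencies installed')"]},
    {"id": "build", "argv": [sys.executable, "-c", "print('build succeeded')"]},
    {"id": "test", "argv": [sys.executable, "-c", "print('tests passed')"]},
]


def run_commands(commands):
    """Run each command in order and stop at the first non-zero exit code.

    Returns a list of (command id, exit code) pairs for the commands that ran.
    """
    results = []
    for command in commands:
        completed = subprocess.run(command["argv"], capture_output=True, text=True)
        results.append((command["id"], completed.returncode))
        if completed.returncode != 0:
            # A failed step means later steps (such as tests) are skipped.
            break
    return results


if __name__ == "__main__":
    for command_id, exit_code in run_commands(COMMANDS):
        status = "ok" if exit_code == 0 else "failed"
        print(f"{command_id}: {status}")
```

Stopping at the first failure reflects the intent described above: code is only treated as ready for review once it has both built and passed its tests.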

AWS is also working toward integrating its AI agents across the entire software development lifecycle via alliances with partners such as GitLab, which would enable DevOps teams to better coordinate their efforts using AWS log data, said Iragavarapu. The goal is to enable DevOps teams to use AI agents to test software at any stage of the development lifecycle, he added.

While the amount of code being generated by application development teams has increased dramatically since the advent of AI coding tools, many developers are finding it challenging to debug code they didn’t initially write themselves. AWS is now, in effect, addressing that issue by using an AI agent to test code that was created either by a human or by another AI agent.

The overall goal is to significantly increase the amount of code created by AI agents that is accepted by the developers tasked with building an application, said Iragavarapu.

It remains to be seen how AI agents will be integrated into DevOps workflows, but it’s now more a question of to what degree rather than if. Application developers, in their own self-interest, are going to rely on these tools to reduce the number of instances where they have to revisit code they created weeks earlier and for which they have since lost much of the context regarding how it was constructed. Hopefully, that will also reduce the number of times DevOps engineers are required to ask those same developers why a particular bottleneck or error ever existed in the first place.