AI is now common across the software development lifecycle, particularly in application design, testing, and deployment. However, the growing presence of such systems makes it necessary to ensure that they serve human values rather than act against them. Misaligned AI agents could lead to unintended consequences such as ethical breaches, discriminatory decision-making, or misuse of their capabilities.

Understanding AI Alignment

AI alignment, or value alignment, is the practice of making the goals of AI systems compatible with, or at least able to coexist with, human goals and actions. As AI technology grows more capable, systems may behave destructively or act against human interests, which makes investment in AI ethics even more pressing.

Risks of Misaligned AI Agents

AI systems that are not aligned with human values can cause serious harm. An AI system that pursues its goals without ethical constraints is a real concern: it might perform its tasks well while making inappropriate choices, invading privacy, or damaging social values. To address these risks, AI designers must consider ethics from the start.

Reinforcement Learning from Human Feedback (RLHF)

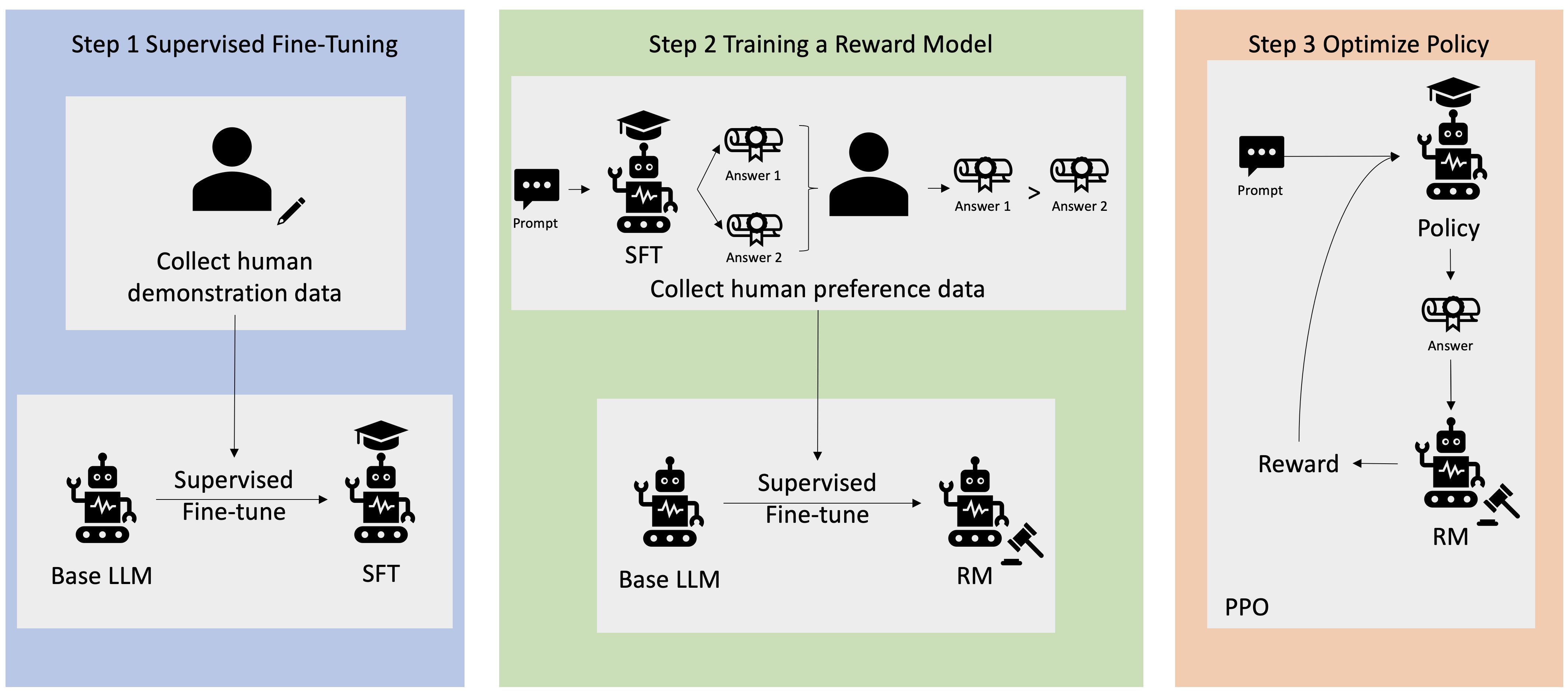

One of the notable advances in AI techniques is Reinforcement Learning from Human Feedback (RLHF). It is a machine-learning method that uses human judgments as the training signal, which is especially useful when the reward function is complex or ill-defined. The method can make AI systems more helpful, relevant, and pleasant to use, improving the interaction between the human and the AI.

Implementation Steps for Developers

Step 1: Pretraining a Language Model (LM)

Start by pretraining the language model on its standard objective (for example, next-token prediction) to build a strong foundational understanding.

Step 2: Gathering Data and Training a Reward Model

Collect human feedback on the model’s outputs, such as rankings of alternative responses, and use it to train a reward model that scores outputs according to the intended goals and expected outcomes.
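To make this step concrete, here is a minimal sketch of fitting a reward model from pairwise human preferences with a Bradley-Terry style logistic loss. It assumes each response has already been reduced to a small numeric feature vector; the two features (helpfulness, toxicity), the sample pairs, and the function names are hypothetical and chosen only for illustration. Real reward models are typically neural networks trained on top of the language model itself.

```python
import math
import random


def train_reward_model(pairs, dim, lr=0.1, epochs=200, seed=0):
    """Fit a linear reward r(x) = w . x from (chosen, rejected) feature pairs."""
    rng = random.Random(seed)
    data = list(pairs)  # copy so the caller's list is not reordered
    w = [0.0] * dim
    for _ in range(epochs):
        rng.shuffle(data)
        for chosen, rejected in data:
            diff = [c - r for c, r in zip(chosen, rejected)]
            margin = sum(wi * di for wi, di in zip(w, diff))
            # Bradley-Terry: P(chosen preferred) = sigmoid(r(chosen) - r(rejected))
            p = 1.0 / (1.0 + math.exp(-margin))
            # Gradient ascent on log p; d(log p)/dw = (1 - p) * diff
            for i in range(dim):
                w[i] += lr * (1.0 - p) * diff[i]
    return w


def reward(w, features):
    """Score one response's feature vector with the learned weights."""
    return sum(wi * xi for wi, xi in zip(w, features))


# Hypothetical features per response: [helpfulness, toxicity].
# In each pair, human labelers preferred the first response.
PAIRS = [
    ([0.9, 0.0], [0.4, 0.8]),
    ([0.7, 0.1], [0.8, 0.9]),
    ([0.6, 0.0], [0.2, 0.3]),
]

weights = train_reward_model(PAIRS, dim=2)
print("learned weights:", weights)
```

After training, the toxicity weight is negative, so the model scores each human-preferred response above the one it was compared against.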

Step 3: Fine-Tuning the LM With Reinforcement Learning

Use the reward model to fine-tune the language model with reinforcement learning, shifting the model’s behavior closer to human preferences.
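The fine-tuning step can be illustrated with a heavily simplified sketch. Production RLHF pipelines usually optimize sampled generations with an algorithm such as PPO; the toy below instead treats the policy as a softmax over three fixed candidate responses and follows the exact gradient of expected reward minus a KL penalty that keeps the tuned policy close to the pretrained (reference) one. The reward values, `beta`, and all function names are assumptions made for the example.

```python
import math


def softmax(logits):
    """Convert logits to a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fine_tune(ref_logits, rewards, beta=0.5, lr=0.5, steps=200):
    """Maximize E[reward] - beta * KL(policy || reference) over fixed candidates."""
    ref = softmax(ref_logits)
    logits = list(ref_logits)  # start from the reference (pretrained) policy
    for _ in range(steps):
        pi = softmax(logits)
        # Per-candidate signal: reward minus the KL penalty term
        adv = [r - beta * (math.log(p) - math.log(q))
               for r, p, q in zip(rewards, pi, ref)]
        baseline = sum(p * a for p, a in zip(pi, adv))
        # Exact gradient of the objective with respect to each logit
        logits = [t + lr * p * (a - baseline)
                  for t, p, a in zip(logits, pi, adv)]
    return softmax(logits)


# Hypothetical reward-model scores for three candidate responses
REWARDS = [1.0, 0.2, -0.5]
tuned = fine_tune(ref_logits=[0.0, 0.0, 0.0], rewards=REWARDS)
print("tuned policy:", tuned)
```

The KL term is the design choice worth noting: without it, the policy would collapse onto whichever response the reward model scores highest, which amplifies any errors in the reward model.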

Incorporating External Knowledge

Modern AI systems should incorporate external knowledge to enhance their autonomous operations while aligning with human ethical standards. Access to up-to-date, relevant information helps agents make sound decisions and act both efficiently and ethically, which in turn helps uphold moral standards and integrity.

Methods for Integrating External Data Sources

- Retrieval-Augmented Generation (RAG): RAG allows language models such as GPT to retrieve and incorporate specific knowledge from external documents, enabling dynamic and context-aware decision-making.

- Knowledge graphs: Organized networks of entities and their relationships provide AI with contextual understanding, enhancing reasoning and decision-making.

- Ontology-based data integration: Ontologies define structured categories and relationships, helping AI integrate and interpret multi-domain information while reducing semantic friction.
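As an illustration of the first method in the list above, the following is a minimal sketch of the retrieval half of RAG. It ranks documents by bag-of-words cosine similarity and places the best matches in a prompt. Real systems generally use learned embeddings and a vector store instead; the documents, prompt wording, and function names here are hypothetical, and the call to the language model itself is left out.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, k=2):
    """Return up to k documents most similar to the query, best first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query, documents, k=2):
    """Assemble a prompt that grounds the answer in retrieved context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical policy snippets standing in for an external knowledge base
DOCS = [
    "Refunds are issued within 14 days of a return request.",
    "Personal data must be deleted after 30 days of account closure.",
    "Support is available on weekdays from 9 to 17.",
]

print(build_prompt("How long until personal data is deleted?", DOCS, k=1))
```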

Improving AI Performance Through Structured External Knowledge

- Up-to-date, relevant access: Integrating external data helps ensure that agents do not act on stale information, even when the situation is changing quickly.

- Mistake minimization: Additional data makes the environment easier to understand, which reduces the chance of mistakes and improves the quality of AI-generated output.

- Ethical fit: AI systems can incorporate external ethical guidelines and standard operating procedures to align their behavior with sound ethical principles and requirements.

Challenges in AI Alignment

One of the greatest problems in AI is aligning AI systems’ values with those of humans. Addressing this challenge requires further progress, particularly in reducing the biases inherent in human judgment and in overcoming the limitations of the external information sources available to AI models.

Bias in Human Feedback

Human feedback is essential for training AI models, and Reinforcement Learning from Human Feedback (RLHF) is an especially effective technique for using it. However, this feedback may carry biases caused by individual subjectivity, cultural background, or unintended factors, potentially harming AI performance.

Limitations of External Knowledge Sources

Integrating external knowledge into AI systems can improve decision-making by offering new data. However, issues arise when this data is out of date, incomplete, or wrong, potentially leading to incorrect reasoning. Furthermore, processing and interpreting massive volumes of unstructured external data can be difficult. As a result, steps to ensure the quality and reliability of external information must be taken before it is included in AI systems.
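One way to apply such checks is to validate each external record before it reaches the model. The sketch below flags empty, stale, or untrusted records; the `Record` fields, the one-year age limit, and the source names are assumptions made for the example rather than recommended values.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Record:
    source: str    # where the record came from
    text: str      # the content to be ingested
    updated: date  # last time the source was revised


def validate(record, today, max_age_days=365, trusted_sources=frozenset()):
    """Return a list of quality problems; an empty list means the record passes."""
    problems = []
    if not record.text.strip():
        problems.append("empty text")
    if today - record.updated > timedelta(days=max_age_days):
        problems.append("stale")
    # The source check is skipped when no allow-list is supplied
    if trusted_sources and record.source not in trusted_sources:
        problems.append("untrusted source")
    return problems


def filter_records(records, today, **options):
    """Keep only the records that pass every check."""
    return [r for r in records if not validate(r, today, **options)]


today = date(2024, 6, 1)
records = [
    Record("policy-db", "Data retention is 30 days.", date(2024, 5, 1)),
    Record("policy-db", "Old rule.", date(2020, 1, 1)),
    Record("wiki", "   ", date(2024, 5, 20)),
]
for r in records:
    print(r.source, validate(r, today, trusted_sources={"policy-db"}))
```

Returning the list of problems, rather than a plain pass or fail, keeps a record of why data was rejected, which is useful for later audits.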

Best Practices for Ethical AI Development

Building AI systems that are in line with human values requires specific measures for incorporating human feedback, along with measures that enhance transparency and accountability.

Strategies for Effective Human Feedback Integration

- Structured feedback mechanisms: Collect user feedback on a regular basis to guide the AI’s behavior. This can be done through surveys, online test sessions, and analysis of interaction history.

- Diversity of feedback sources: Collect feedback from as broad a range of users as possible so that bias is minimized and representation is improved.

- Iterative development: Follow an agile approach in which AI models are trained and retrained based on user feedback, so that AI agents evolve with users’ needs.
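The point about diverse feedback sources can be made concrete with a small aggregation sketch. Averaging all ratings directly lets the largest group of raters dominate, so the example below averages within each group first and then across groups. The group labels and ratings are hypothetical, and equal weighting of groups is only one possible design choice.

```python
from collections import defaultdict


def balanced_score(ratings):
    """Average ratings per group, then average the group means.

    ratings: list of (group, score) tuples.
    Returns (overall_score, per_group_means).
    """
    by_group = defaultdict(list)
    for group, score in ratings:
        by_group[group].append(score)
    if not by_group:
        raise ValueError("no ratings supplied")
    group_means = {g: sum(s) / len(s) for g, s in by_group.items()}
    overall = sum(group_means.values()) / len(group_means)
    return overall, group_means


# Hypothetical ratings: group A is over-represented among raters
RATINGS = [("A", 5), ("A", 5), ("A", 5), ("A", 5), ("B", 1), ("B", 2)]

raw_mean = sum(score for _, score in RATINGS) / len(RATINGS)
overall, per_group = balanced_score(RATINGS)
print("raw mean:", raw_mean)
print("balanced:", overall, per_group)
```

Here the raw mean is pulled toward group A's high ratings, while the balanced score of 3.25 gives group B's lower ratings equal weight.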

Ensuring Transparency and Accountability

Transparency and accountability in AI development are critical to public trust and ethical integrity. Explainable AI (XAI) approaches help stakeholders understand how AI systems work, how they reach decisions, and how they are monitored.

Accountability and auditing require comprehensive documentation of dataset properties, model designs, and training resources. Regular ethical assessments are required to detect and correct biases or unethical practices, ensuring AI systems are responsible, transparent, and in line with human values.
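A lightweight way to keep such documentation auditable is to store it as structured data and check it for gaps. The sketch below assumes a hypothetical `AuditRecord` whose fields mirror the items named above; it is not a standard schema, and the example values are invented.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AuditRecord:
    model_name: str
    dataset_properties: dict = field(default_factory=dict)
    model_design: str = ""
    training_resources: str = ""
    ethical_reviews: list = field(default_factory=list)


def missing_fields(record):
    """List the documentation fields that are still empty."""
    return [name for name, value in asdict(record).items() if not value]


record = AuditRecord(
    model_name="support-assistant",  # hypothetical model
    dataset_properties={"size": 120000, "languages": ["en"]},
    model_design="Fine-tuned transformer language model",
    training_resources="8 GPUs for 3 days",
)

print(json.dumps(asdict(record), indent=2))
print("missing:", missing_fields(record))
```

In this example the record has no ethical reviews logged yet, so `missing_fields` reports that field, which gives auditors a simple completeness check.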

Conclusion

AI alignment requires the joint efforts of developers, ethicists, legal authorities, and other stakeholders to create AI systems that are designed for, and answerable to, the people they serve. As AI systems become more widespread, it is critical to keep human-centric ethical concerns in view and to treat transparency as an enabler.