A recent McKinsey article entitled ‘Yes, you can measure software developer productivity’ has the software developer community talking, and the general consensus is that developers are not happy.

Most software developers are not enthusiastic about the prospect of having their individual performance metrics monitored. “Anyone who attempts to measure software in some sort of scientific way is just doing the wrong thing,” said YouTube personality ThePrimeTime. Dave Farley of Continuous Delivery even goes as far as to call the McKinsey report “harmful dribble.”

This debate arises as developer productivity engineering (DPE) gains traction as a means to improve developer performance and, perhaps, as a response to employment landscape shifts like the Great Resignation, which saw developers and DevOps pros leaving their roles. Companies like Spotify, LinkedIn, Netflix and others are also investing in internal developer platforms (IDPs) to increase developer productivity and satisfaction amid a limited talent supply.

Alongside these ambitions, much has been written about how managers can measure developer productivity using qualitative metrics, like developer satisfaction, as well as quantitative metrics, like the number of commits, story points, deployment frequency or failure rates. But can tracking such metrics deliver tangible results? Or does it go too far?

Below, we’ll consider whether you can actually measure software developer productivity. But more than that, we’ll evaluate whether this is a meaningful tactic with desirable outcomes.

Ways to Measure Development Productivity: DORA

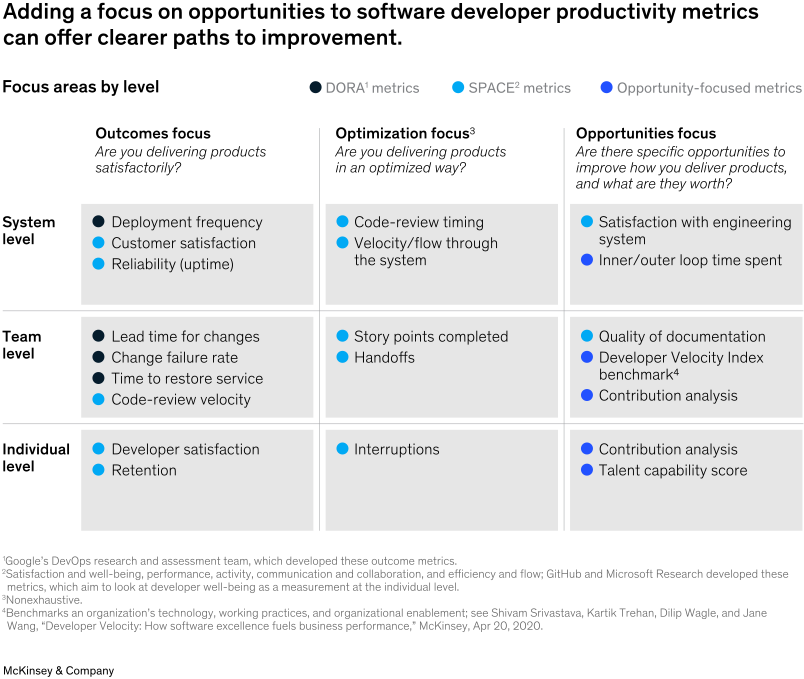

First, let’s look at some of the standard techniques for measuring development productivity. After years of research, the DevOps Research and Assessment (DORA) team at Google identified four key metrics:

- Deployment frequency: How often you ship changes.

- Lead time for changes: The time it takes for a committed change to reach production.

- Change failure rate: The percentage of deployments that cause a failure in production, such as downtime, degraded service or a rollback.

- Time to restore service: How long it takes, on average, to recover from a failure in production, also known as mean time to recovery (MTTR).

These metrics can be helpful indicators of a software development team’s overall agility and health. They can signal bottlenecks in the CI/CD process or highlight where too much time is spent remediating issues, prompting positive changes. Addressing these root causes could improve a team’s productivity.
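To make the four definitions concrete, here is a minimal Python sketch that computes them at the team level from a list of deployment records. The `Deployment` record and the `dora_metrics` function are hypothetical illustrations, not part of any official DORA tooling, and the sketch rests on simplifying assumptions: lead time runs from each commit to the deployment that shipped it, time to restore runs from the failing deployment to the moment service was restored (real tooling often starts the clock when the incident is detected), and the reporting period is given as a number of days.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    deployed_at: datetime
    # Commit timestamps of the changes shipped in this deployment.
    commit_times: list[datetime] = field(default_factory=list)
    failed: bool = False  # Did this deployment cause a production failure?
    restored_at: Optional[datetime] = None  # When service was restored, if it failed.


def _hours(delta: timedelta) -> float:
    """Convert a timedelta to fractional hours."""
    return delta.total_seconds() / 3600


def dora_metrics(deploys: list[Deployment], period_days: float) -> dict[str, float]:
    """Compute the four DORA metrics for a team over a reporting period."""
    if not deploys or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")

    # Deployment frequency: deployments per day over the period.
    frequency = len(deploys) / period_days

    # Lead time for changes: commit -> production, one sample per commit.
    lead_times = [_hours(d.deployed_at - c) for d in deploys for c in d.commit_times]

    # Change failure rate: share of deployments that caused a failure.
    failures = [d for d in deploys if d.failed]
    failure_rate = len(failures) / len(deploys)

    # Time to restore (assumption): from the failing deployment until recovery.
    restore_times = [
        _hours(d.restored_at - d.deployed_at)
        for d in failures
        if d.restored_at is not None
    ]

    return {
        "deploys_per_day": frequency,
        "median_lead_time_hours": median(lead_times) if lead_times else 0.0,
        "change_failure_rate": failure_rate,
        "mean_time_to_restore_hours": (
            sum(restore_times) / len(restore_times) if restore_times else 0.0
        ),
    }
```

Taking the median for lead time keeps one long-lived branch from skewing the figure; that choice is also an assumption rather than part of the DORA definitions. Note that the inputs are deployments, not people, so the result describes the team as a whole.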

Going Beyond DORA: SPACE

DORA later added a fifth metric, reliability, to the list. That’s understandable, given the interest in building reliable systems. But things start to get more complex when SPACE enters the equation.

Proposed in a 2021 paper by GitHub researchers, SPACE augments DORA with the following attributes:

- Satisfaction and well-being

- Performance

- Activity

- Communication and collaboration

- Efficiency and flow

Metrics like satisfaction, or communication and collaboration, are fairly subjective. Plus, it can be challenging to gather this data and to encourage engineers to speak honestly in surveys. Measuring the activity of individual team members, like the number of issues closed or pull requests opened, isn’t directly tied to the value delivered and could even have a negative psychological effect.

“Consequently, teams can become fixated on them, as seemingly positive changes are relatively easy to achieve,” wrote James Walker. He adds that SPACE still doesn’t explain why the measurements have value. Furthermore, it lacks actionable information and might be tricky to implement, given developer pushback against performance analysis systems.

Opportunity-Focused Metrics

The McKinsey report adds to the mix what it calls “opportunity-focused productivity metrics,” which include inner/outer loop time spent, a Developer Velocity Index benchmark, contribution analysis and a talent capability score. (It’s not entirely clear how these metrics would be computed.) It then organizes all these metrics into a framework that further segments measurements into system, team and individual levels.

While some believe in the framework’s legitimacy, many commentators doubt it can work in practice. “Introducing the kind of framework that McKinsey is proposing is wrong-headed and certain to backfire,” wrote PragmaticEngineer.com. “The report is so absurd and naive that it makes no sense to critique it in detail,” wrote Kent Beck.

Things to Avoid With Developer Productivity Metrics

Apparently, developers are very wary of being measured. And given the recent backlash, overreliance on productivity metrics could harm engineering culture. So engineering leadership should be careful when implementing these sorts of tactics. Here are some anti-patterns to avoid.

Don’t make it about individual performance. Metrics like DORA have become standards for measuring the efficiency of software teams, but they shouldn’t be applied at the individual level. “Measuring software development in terms of individual developer productivity is a terrible idea,” said Dave Farley. Software development is a collaborative process, so performance metrics should reflect how the team operates.

Don’t measure developer productivity in isolation. On that note, performance metrics shouldn’t be judged without context. Failures, for example, often stem from factors outside an individual developer’s control. At the same time, it can be hard to correlate individual developer contributions with end-customer satisfaction.

Don’t create an opportunity to game productivity systems. Leadership shouldn’t directly tie a developer’s salary, reputation or position to whether they hit certain metrics. If productivity systems carry this much weight, developers will have every incentive to game them.

Don’t put in more effort than it’s worth. In tech, where there’s hype, there’s money to be made, and a host of vendors are ready to hawk monitoring tools. What’s more, to enact these productivity measurement enhancements, McKinsey noted that “most companies’ tech stacks will require potentially extensive reconfiguration.” Devoting significant engineering resources to monitoring engineers is a big pill to swallow.

Don’t put too much faith in metrics. Lastly, metrics only show one side of the picture. They are just data points and can’t always depict what it’s really like on the ground. And qualitative survey responses might be skewed by developers’ reluctance to speak up.

Remember Goodhart’s Law and Focus On Quality

Personally, I believe that measuring things like developer productivity, efficiency, experience and satisfaction does have merit for some businesses. Many teams now work remotely and asynchronously, and metrics help leaders take the pulse of a team’s performance without sharing an office.

It’s also nothing new. Leadership has been attempting to measure developer productivity for years. But these metrics often lack context and come with many caveats. Thus, they shouldn’t be given too big a seat at the table.

I like this ‘law’ from British economist Charles Goodhart, which is colloquially summarized as “when a measure becomes a target, it ceases to be a good measure.” In other words, when teams start working to hit the metrics, engineering culture and overall code quality might decline. This matters because a separate study found that increases in code quality tend to be followed by increases in developer productivity.

So, focus first on establishing code quality and, hopefully, productivity will flow.