Web scraping has become an indispensable tool in today’s data-driven world. Python, one of the most popular languages for scraping, has a vast ecosystem of powerful libraries and frameworks. In this article, we will explore the best Python libraries for web scraping, each offering unique features and functionalities to simplify the process of extracting data from websites.

This article also covers best practices for efficient and responsible web scraping. From respecting website policies and handling rate limits to addressing common challenges, we will provide practical guidance to help you scrape effectively.

Scrape-It.Cloud

Let’s start with the Scrape-It.Cloud library, which provides access to an API for scraping data. This solution has several advantages. For instance, requests go through an intermediary instead of hitting the target website directly. This greatly reduces the risk of being blocked when scraping large amounts of data, so we don’t need our own proxies. We don’t have to solve captchas because the API handles that. Additionally, we can scrape both static and dynamic pages.

Features

With the Scrape-It.Cloud library, you can extract data from a site with a simple API call. It takes over the work otherwise done by proxy servers, headless browsers, and captcha-solving services.

When given a URL, Scrape-It.Cloud returns JSON with the requested data. This allows you to focus on extracting the right data without worrying about being blocked.

Moreover, this API allows you to extract data from dynamic pages created with React, AngularJS, Ajax, Vue.js, and other popular libraries.

Also, if you need to collect data from Google SERPs, you can use the same API key with the service’s SERP API library for Python.

Installing

To install the library, run the following command:

pip install scrapeit-cloud

To use the library, you’ll also need an API key. You can get it by registering on the website. Upon registration, you’ll also get some free credits to make requests and explore the library’s features.

Example of Use

A detailed description of all the functions, features, and ways to use a particular library deserves a separate article. For now, we’ll just show you how to get the HTML code of any web page, regardless of whether it’s accessible to you, whether it requires a captcha solution, and whether the page content is static or dynamic.

To do this, just specify your API key and the page URL.

from scrapeit_cloud import ScrapeitCloudClient
import json

client = ScrapeitCloudClient(api_key="YOUR-API-KEY")

response = client.scrape(
    params={
        "url": "https://example.com/"
    }
)

Since the results come in JSON format, and the content of the page is stored under ["scrapingResult"]["content"], we will use this to extract the desired data.

data = json.loads(response.text)
print(data["scrapingResult"]["content"])

As a result, the HTML code of the retrieved page will be displayed on the screen.
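To make the response structure more concrete, here is a minimal sketch that parses a payload of the shape described above. The sample JSON string and the helper name extract_html are invented for illustration; only the scrapingResult and content keys come from the text, and a real response may carry additional fields.

```python
import json

# Hypothetical sample payload: only the "scrapingResult" -> "content" path
# is taken from the article; the HTML inside is made up for illustration.
SAMPLE_RESPONSE = (
    '{"scrapingResult": {"content": '
    '"<html><body><h1>Example Domain</h1></body></html>"}}'
)


def extract_html(raw_json):
    """Return the page HTML stored under scrapingResult.content."""
    data = json.loads(raw_json)
    try:
        return data["scrapingResult"]["content"]
    except KeyError as exc:
        # Fail with a readable message if the payload has another shape.
        raise ValueError(f"unexpected response shape, missing key: {exc}") from exc


if __name__ == "__main__":
    print(extract_html(SAMPLE_RESPONSE))
```

Wrapping the lookup in a small function keeps the error handling in one place if the payload ever arrives without the expected keys.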

Requests and BeautifulSoup Combination

One of the simplest and most popular libraries is BeautifulSoup. However, keep in mind that it is a parsing library and does not have the ability to make requests on its own. Therefore, it is usually used with a simple request library like Requests, http.client, or cURL.

Features

This library is designed for beginners and is quite easy to use. Additionally, it has well-documented instructions and an active community.

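The BeautifulSoup library (or BS4) is specifically designed for parsing, which gives it extensive capabilities. You can locate elements with its own search methods, such as find() and find_all(), or with CSS selectors through select(). XPath is not supported; if you need it, use LXML, which is covered later in this article.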

Due to its simplicity and active community, numerous examples of its usage are available online. Moreover, if you encounter difficulties while using it, you can receive assistance to solve your problem.

Installing

As mentioned, we need two libraries. For handling requests, we will use the Requests library. Note that Requests is a third-party package rather than part of Python’s standard library, so install it together with BeautifulSoup:

pip install requests beautifulsoup4

Once both are installed, you can start using them right away.

Example of Use

Let’s say we want to retrieve the <h1> tag, which holds the page’s main heading. To do this, we first need to import the necessary libraries and make a request to get the page’s content:

import requests
from bs4 import BeautifulSoup

data = requests.get('https://example.com')

To process the page, we’ll use the BS4 parser:

soup = BeautifulSoup(data.text, "html.parser")

Now, all we have to do is specify the exact data we want to extract from the page:

text = soup.find_all('h1')

Finally, let’s display the obtained data on the screen:

print(text)

This prints a list of the matching <h1> tags.

As we can see, using the library is quite simple. However, it does have its limitations. For instance, it cannot scrape dynamic data since it’s a parsing library that works with a basic request library rather than headless browsers.
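If you want the text of an element rather than the whole tag, a sketch along the following lines may help. It parses an inline HTML string (a stand-in for the response text, so it runs without a network connection) and uses a CSS selector through select_one(). The sample markup, the title class, and the function name are assumptions made for this illustration.

```python
from bs4 import BeautifulSoup

# Inline HTML stands in for `requests.get(...).text` so the sketch runs offline.
SAMPLE_HTML = """
<html>
  <body>
    <h1 class="title">Example Domain</h1>
    <p>First paragraph.</p>
    <p>Second paragraph.</p>
  </body>
</html>
"""


def extract_heading_and_paragraphs(html):
    """Return the heading text (or None) and a list of paragraph texts."""
    soup = BeautifulSoup(html, "html.parser")
    # select_one() takes a CSS selector and returns the first match or None.
    heading = soup.select_one("h1.title")
    # get_text(strip=True) drops the surrounding tags and whitespace.
    paragraphs = [p.get_text(strip=True) for p in soup.find_all("p")]
    heading_text = heading.get_text(strip=True) if heading else None
    return heading_text, paragraphs


if __name__ == "__main__":
    print(extract_heading_and_paragraphs(SAMPLE_HTML))
```

Checking for None before calling get_text() avoids an AttributeError on pages where the selector matches nothing.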

LXML

LXML is another popular library for parsing data, and it can’t be used for scraping on its own. Since it also requires a library for making requests, we will use the familiar Requests library that we already know.

Features

Despite its similarity to the previous library, it does offer some additional features. For instance, it is more specialized in working with XML document structures than BS4. While it also supports HTML documents, this library would be a more suitable choice if you have a more complex XML structure.

Installing

As with BeautifulSoup, we need a request library alongside the parser. If Requests is already installed from the previous example, only LXML is left to install.

To install LXML, enter the following command in the command prompt:

pip install lxml

Now let’s move on to an example of using this library.

Example of Use

To begin, just like last time, we need to use a library to fetch the HTML code of a webpage. This part of the code will be the same as the previous example:

import requests

from lxml import html

data = requests.get('https://example.com')

Now we need to pass the result to a parser so that it can process the document’s structure:

tree = html.fromstring(data.content)

Finally, all that’s left is to specify a CSS selector or XPath for the desired element and print the processed data on the screen (CSS selectors in LXML additionally require the cssselect package). Let’s use XPath as an example; appending /text() returns the heading’s text rather than the element object:

headings = tree.xpath('//h1/text()')
print(headings)

As a result, we will get the text of the same heading as in the previous example.

However, although it may not be very noticeable in a simple example, the LXML library is more challenging for beginners than the previous one. It is also less thoroughly documented and has a less active community.

Therefore, LXML is recommended when you are dealing with complex XML structures that are difficult to process using other methods.
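To illustrate the XML use case, here is a small sketch that filters nodes with an XPath predicate. The catalog document, its element names, and the function name are invented for this example; passing the limit as an XPath variable ($limit) avoids building the expression by string concatenation.

```python
from lxml import etree

# Made-up XML document used only for this illustration.
SAMPLE_XML = b"""<?xml version="1.0"?>
<catalog>
  <book id="b1"><title>First</title><price>10.50</price></book>
  <book id="b2"><title>Second</title><price>7.25</price></book>
</catalog>
"""


def titles_cheaper_than(xml_bytes, limit):
    """Return the titles of all books whose price is below `limit`."""
    root = etree.fromstring(xml_bytes)
    # The keyword argument binds the $limit variable inside the expression.
    matches = root.xpath("//book[price < $limit]/title/text()", limit=limit)
    # Convert lxml's string results to plain Python strings.
    return [str(title) for title in matches]


if __name__ == "__main__":
    print(titles_cheaper_than(SAMPLE_XML, 8.0))
```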

Scrapy

Unlike previous examples, Scrapy is not just a library but a full-fledged framework for web scraping. It doesn’t require additional libraries and is a self-contained solution. However, for beginners, it may seem quite challenging. If this is your first web scraper, it’s worth considering another library.

Features

Despite its shortcomings, this framework is an invaluable solution in certain situations, for example, when you want your project to be easily scalable. It is also a good fit if you need multiple scrapers within the same project that share the same settings: you can run each of them with a single command and export all the collected information in the right format.

A single scraper created with Scrapy is called a spider and can either be the only one or one of many spiders in a project. The project has its own configuration file that applies to all scrapers within the project. In addition, each spider can define its own settings, which override the project-wide settings for that spider only.

Installing

You can install this framework like any other Python library by entering the installation command in the command line:

pip install scrapy

Now let’s move on to an example of using this framework.

Example of Use

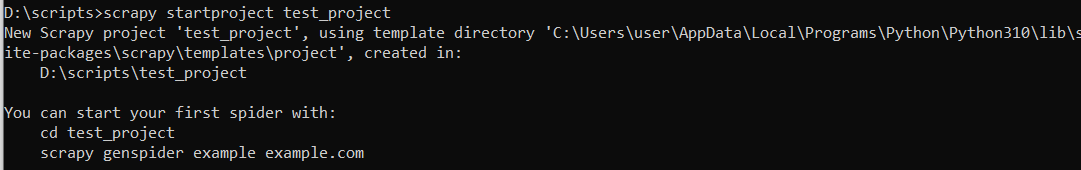

Unlike the library examples, a Scrapy project and its spider files are created with dedicated commands entered at the command line.

To begin, let’s create a new project where we’ll build our scraper. Use the following command:

scrapy startproject test_project

Instead of test_project you can enter any other project name. Now we can navigate to our project folder or create a new spider right here.

Before we move on to creating a spider, let’s look at our project tree’s structure.

These files are generated automatically when you create a new project. Any settings specified in them apply to all spiders within the project. You can define the structure of the scraped items in the “items.py” file, specify how scraped items are processed in the “pipelines.py” file, and configure general project settings in the “settings.py” file.
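As an illustration of project-wide configuration, a “settings.py” fragment might look like the sketch below. The setting names are standard Scrapy settings, but the values are placeholders chosen for this example rather than recommendations.

```python
# settings.py (fragment): the values below are illustrative placeholders.

BOT_NAME = "test_project"

# Respect the rules published in each site's robots.txt.
ROBOTSTXT_OBEY = True

# Minimum number of seconds to wait between requests to the same site.
DOWNLOAD_DELAY = 1.0

# Cap the number of parallel requests sent to a single domain.
CONCURRENT_REQUESTS_PER_DOMAIN = 4

# Let Scrapy adapt the delay to the server's response times.
AUTOTHROTTLE_ENABLED = True
```

A spider that needs different values can override them through its custom_settings class attribute without touching the project file.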

Now let’s go back to the command line and navigate to our project folder:

cd test_project

After that, we’ll create a new spider while being in the folder of the desired project:

scrapy genspider example example.com

Next, you can open the spider file and edit it manually. The genspider command creates a template that makes it easier to build your scraper. To retrieve the page’s heading, go to the spider file and find the following method:

def parse(self, response):
    pass

Replace pass with the code that performs the necessary work. In our case, it involves extracting data from the h1 tag. The snippet assumes DemoItem is an item class with a text field, defined in “items.py” and imported into the spider file:

def parse(self, response):
    item = DemoItem()
    # Collect the markup of every <h1> element on the page.
    item["text"] = response.xpath("//h1").extract()
    return item

Afterward, you can configure the execution of the spiders within the project and run one, for instance with scrapy crawl example, to obtain the desired data.

Selenium

Selenium is a highly convenient library that not only allows you to extract data and scrape simple web pages but also enables the use of headless browsers. This makes it suitable for scraping dynamic web pages. So, we can say that Selenium is one of the best libraries for web scraping in Python.

Features

The Selenium library was originally developed for software testing purposes, meaning it allows you to mimic the behavior of a real user effectively. This feature reduces the risk of blocking during web scraping. In addition, Selenium allows collecting data and performing necessary actions on web pages, such as authentication or filling out forms.

This library uses a web driver that provides access to these functions. You can choose any supported web driver, but the Firefox and Chrome web drivers are the most popular. This article will use the Chrome web driver as an example.

Installing

Let’s start by installing the library:

pip install selenium

Also, as mentioned earlier, we need a web driver to control the browser. We just need to download it and put it in any folder. We will specify the path to the driver in our code later on.

You can download the web driver from the official website. Remember that it is important to use the version of the web driver that corresponds to the version of the browser you have installed.

Example of Use

To use the Selenium library, create an empty *.py file and import the necessary libraries:

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By

After that, let’s specify the path to the web driver and define that we’ll be using it. In Selenium 4, the path is passed through a Service object; the older executable_path argument is no longer supported in recent releases:

# Raw string so the backslash is not treated as an escape character.
DRIVER_PATH = r'C:\chromedriver.exe'
driver = webdriver.Chrome(service=Service(DRIVER_PATH))

Here you can additionally specify some parameters, such as the operating mode. The browser can run in active mode, where you will see all your script’s actions. Alternatively, you can choose headless mode, in which the browser window is hidden. The browser window is displayed by default, so we won’t change anything.

Now that we’re done with the setup, let’s open the target page:

driver.get("https://example.com/")

At this point, your script will automatically go to the desired web page. Now we just have to specify what data we want to retrieve, display the retrieved data, and shut down the web driver:

elements = driver.find_elements(By.CSS_SELECTOR, "h1")
# find_elements returns WebElement objects; .text holds the visible text.
print([element.text for element in elements])
driver.quit()

It’s important not to forget to shut down the web driver at the end of the script’s execution. Note that close() only closes the current window, while quit() ends the whole browser session. Without it, the browser and driver processes can keep running after the script finishes, which can noticeably affect the performance of your PC.

Pyppeteer

The last library we will discuss in our article is Pyppeteer. It is an unofficial Python port of Puppeteer, a popular browser automation library for Node.js. Puppeteer has a vibrant community and detailed documentation, but unfortunately, most of it is focused on Node.js. So, if you decide to use Pyppeteer, it’s important to keep that in mind.

Features

Because it is a port of a library originally developed for Node.js, its API closely follows Puppeteer’s. It drives a headless Chrome or Chromium browser, which makes it useful for scraping dynamic web pages.

Installing

To install the library, go to the command line and enter the command:

pip install pyppeteer

Usually, this library is used together with asyncio, which lets the script wait on browser operations without blocking. asyncio ships with Python’s standard library, so we won’t need to install anything else.

Example of Use

Let’s look at a simple example of using the Pyppeteer library. We’ll create a new Python file and import the necessary libraries to do this.

import asyncio
from pyppeteer import launch

Now let’s do the same as in the previous example: navigate to a page, collect data, display it on the screen, and close the browser.

async def main():
    browser = await launch()
    page = await browser.newPage()
    await page.goto('https://example.com')
    # querySelector returns the first matching element (or None).
    heading = await page.querySelector("h1")
    # Read the element's text inside the page context.
    text = await page.evaluate("(element) => element.textContent", heading)
    print(text)
    await browser.close()

asyncio.run(main())

Since this library mirrors Puppeteer’s API, beginners might find it somewhat challenging.

Best Practices and Considerations

To make web scraping more efficient, there are some rules to follow. Adhering to these rules helps make your scraper more effective and ethical and reduces the load on the services you gather information from.
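One way to respect a website’s policies is to consult its robots.txt file before requesting a page. The sketch below uses the standard library’s urllib.robotparser on an inline sample file so that it runs offline; the sample rules and the my-scraper agent name are assumptions made for this example.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt content, invented for this illustration.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt):
    """Create a parser from robots.txt text instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    parser = build_parser(SAMPLE_ROBOTS_TXT)
    # Ask whether our (hypothetical) user agent may fetch each URL.
    print(parser.can_fetch("my-scraper", "https://example.com/index.html"))
    print(parser.can_fetch("my-scraper", "https://example.com/private/data"))
    # The requested delay between requests, if the site declares one.
    print(parser.crawl_delay("my-scraper"))
```

For a live site, set_url() followed by read() fetches and parses the real file instead of the inline sample.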

Avoiding Excessive Requests

During web scraping, avoiding excessive requests is important to prevent being blocked and reduce the load on the target website. That’s why gathering data from websites during their least busy hours, such as at night, is recommended. This can help decrease the risk of overwhelming the resource and causing it to malfunction.
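A simple way to limit the request rate is to enforce a minimum pause between consecutive requests. The following is a minimal sketch, not a production rate limiter; the Throttle class and its parameters are hypothetical names, and the clock and sleep functions are injectable only so the behavior can be checked without real waiting.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_call = None  # time of the previous request, if any

    def wait(self):
        """Sleep just long enough to keep calls min_interval seconds apart."""
        now = self._clock()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_call = now


if __name__ == "__main__":
    throttle = Throttle(min_interval=0.5)
    for page_number in range(3):
        throttle.wait()  # call this right before each request
        print("fetching page", page_number)
```

Calling wait() before every request keeps the pause independent of how long each download takes.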

Dealing with Dynamic Content

During the process of gathering dynamic data, there are two approaches. You can do the scraping yourself by using libraries that support headless browsers. Alternatively, you can use a web scraping API that will handle the task of collecting dynamic data for you.

If you have good programming skills and a small project, it might be better for you to write your own scraper using libraries. However, a web scraping API would be preferable if you are a beginner or need to gather data from many pages. In such cases, besides collecting dynamic data, the API will also take care of proxies and solving captchas; the Scrape-It.Cloud API described earlier is one example.

User-Agent Rotation

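It’s also important to consider that your bot will stand out noticeably if it doesn’t send a realistic User-Agent header. HTTP libraries typically send their own default value (Requests, for example, identifies itself as python-requests), which is easy to detect. Every browser sends its own User-Agent when visiting a webpage, and you can view it in the Network tab of your browser’s DevTools. It’s advisable to change the User-Agent value randomly for each request.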
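A rotation of this kind can be sketched in a few lines. The User-Agent strings below are examples only and will go out of date, and the random_headers helper is a hypothetical name introduced for this illustration.

```python
import random

# Example browser User-Agent strings; keep a list like this up to date.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.4 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0",
]


def random_headers(rng=random):
    """Build request headers with a randomly chosen User-Agent."""
    return {"User-Agent": rng.choice(USER_AGENTS)}


if __name__ == "__main__":
    print(random_headers())
```

With Requests, the result can be passed through the headers argument, for example requests.get(url, headers=random_headers()).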

Proxy Usage and IP Rotation

As we’ve discussed before, there is a risk of being blocked when it comes to scraping. To reduce this risk, it is advisable to use proxies that hide your real IP address.

However, having just one proxy is not sufficient. It is preferable to have rotating proxies, although they come at a higher cost.
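If you manage your own pool of proxies, a basic round-robin rotation could look like the sketch below. The addresses are placeholders from a documentation-only IP range, and proxy_rotation is a hypothetical helper; the dictionaries it yields follow the format that Requests accepts in its proxies argument.

```python
from itertools import cycle

# Placeholder addresses from a documentation-only range; replace with real ones.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def proxy_rotation(proxies):
    """Yield Requests-style proxy mappings, cycling through the pool forever."""
    if not proxies:
        raise ValueError("the proxy pool must not be empty")
    for proxy in cycle(proxies):
        # Route both plain and TLS traffic through the same proxy.
        yield {"http": proxy, "https": proxy}


if __name__ == "__main__":
    rotation = proxy_rotation(PROXIES)
    for _ in range(4):
        print(next(rotation))
```

Each request then takes the next mapping, for example requests.get(url, proxies=next(rotation)).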

Conclusion and Takeaways

This article discussed the libraries used for web scraping and the rules to follow when using them. To summarize, the comparison table below highlights the key features of the Python libraries we covered:

| Library | Parsing Capabilities | Advanced Features | JS Rendering | Ease of Use |
| --- | --- | --- | --- | --- |
| Scrape-It.Cloud | HTML, XML, JavaScript | Automatic scraping and pagination | Yes | Easy |
| Requests and BeautifulSoup Combo | HTML, XML | Simple integration | No | Easy |
| Requests and LXML Combo | HTML, XML | XPath and CSS selector support | No | Moderate |
| Scrapy | HTML, XML | Multiple spiders | No | Moderate |
| Selenium | HTML, XML, JavaScript | Dynamic content handling | Yes (using web drivers) | Moderate |
| Pyppeteer | HTML, JavaScript | Browser automation with headless Chrome or Chromium | Yes | Moderate |

Overall, Python is a highly useful programming language for data collection. With its wide range of tools and user-friendly nature, it is often used for data mining and analysis. Python enables tasks related to extracting information from websites and processing data to be easily accomplished.
